Pioneering Edge Computing in the 1970s - "A Little Intel 8008 Computer"

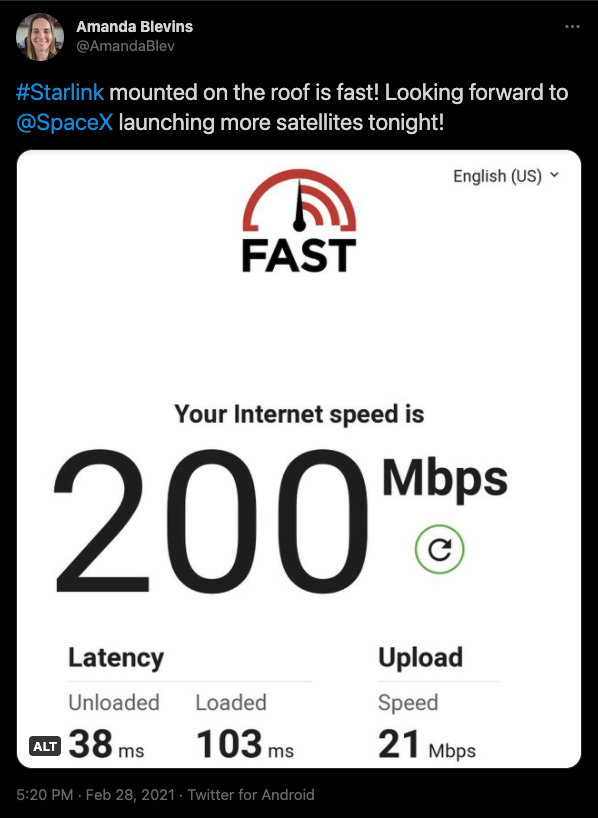

Hello everyone! The post below was not written by me. It was written by my Dad, Charles (Chuck) Blevins. I’m proud to share it because (1) it is super cool tech, especially for the mid-1970s, and (2) after reading it, I realized that my Dad not only taught me so much about computers when I was a kid, but that I also bring to my own life and career the same curiosity and grit he showed throughout his academic and professional career.

This post has something for everyone - solving a new and complex problem, hardware design, chip architecture, custom logic, humor, and inspiration! I hope you enjoy reading it as much as I did.

In the fall of 1974 I began my formal career in electrical engineering. I majored in chemistry as an undergraduate and for a Master’s degree. But after working a few years as a chemist and even inventing an electronic device for measuring the pasting temperature of modified corn starch, I knew that I wanted to switch fields and develop electronic hardware. Hence, I enrolled in the Ph.D. program at the University of Arkansas.

I told them that I wanted to learn about microprocessors. The professors agreed that they too wanted to know more about microprocessors. Intel had made a big splash with the 4004 and 4040 a couple of years earlier. Now the 8008 microprocessor was available and there were rumblings about the venerable 8080 microprocessor. I chose the 8008 microprocessor as it was easy to get. I chose the Intel 1101 SRAM as it was available and the least expensive. Even so, the total cost of these parts was about $200 back then. Since I had no application in mind when I started the design and no computer experience, I didn’t realize how little memory 256 bytes was.

I had to design the circuit, then lay out and etch the circuit board. If you look at the 8008 datasheet, the most obvious thing is that there are only 18 pins, so the 14 address bits have to share the 8-bit data bus. I had to add latches to capture the upper and lower address bits. This requires decoding the three state bits. I used an SN74138 3-to-8 decoder to track the state machine. The processor state machine has 5 states to execute an instruction fetch, data read, or data write if no wait states are inserted. The upper address bits are latched on the T2 state. The two most significant bits must be decoded to determine if memory or I/O space is being accessed. The bits also encode whether the data is being read or written. The decoder consists of SSI AND gates, NAND gates, and inverters such as the SN7408, SN7400, and SN7406. (Note that other logic devices have 3 or 4 inputs and decode with fewer devices.) Modern processors have multiple states, but these are hidden in a pipeline. They also supply memory read/write and I/O read/write signals, and they have more pins per device so that address and data lines aren’t multiplexed. One very important lesson from examining the 8008 is that it is a state machine and, by extension, later processors are just more complex state machines. (As a personal aside, state machines are very important when implementing complex logic with field programmable gate arrays (FPGAs). FPGAs first appeared about 1985 and are my favorite devices when designing custom logic.)
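For readers who think more easily in software, here is a rough Python model of the address latching described above. It is only a sketch: the class name `AddressLatch` and its methods are made up for illustration, the state names are passed in as plain strings rather than decoded from the real state pins, and the two cycle-type bits are kept as a raw number because the datasheet, not this sketch, defines which pattern means fetch, read, I/O, or write.

```python
class AddressLatch:
    """Hypothetical software model of the external address latches.

    Assumption: the low 8 address bits appear on the bus during T1, and the
    upper 6 address bits plus 2 cycle-type bits appear during T2.
    """

    def __init__(self):
        self.low = 0          # address bits A0..A7
        self.high = 0         # address bits A8..A13
        self.cycle_bits = 0   # two most significant bus bits seen in T2

    def clock(self, state, bus):
        """Capture the 8-bit bus value according to the decoded state."""
        if state == "T1":
            self.low = bus & 0xFF
        elif state == "T2":
            self.high = bus & 0x3F
            # Raw value only; see the 8008 datasheet for what each
            # pattern means (memory vs. I/O, read vs. write).
            self.cycle_bits = (bus >> 6) & 0x3
        # Other states (T3, T4, T5, WAIT, ...) leave the latches alone.

    @property
    def address(self):
        """Reassemble the 14-bit address from the two latches."""
        return (self.high << 8) | self.low


latch = AddressLatch()
latch.clock("T1", 0x34)          # low address byte
latch.clock("T2", 0b10_010010)   # cycle bits = 0b10, high address = 0x12
print(hex(latch.address), latch.cycle_bits)
```

In hardware, the `if state == ...` tests are what the 3-to-8 decoder does, and the two attributes are the latch chips.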

With a 500 kHz clock and two clocks per state, each state took 4 microseconds, so the minimum instruction execution time (five states) was 20 microseconds. Instructions that needed data required 40 microseconds. Although this is very slow by modern standards, it is fast enough to be useful and that will be apparent later in the story.
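The timing figures above can be checked with a few lines of Python. This is just the arithmetic from the paragraph, with made-up constant and function names, and it assumes that an instruction needing data takes two full five-state cycles.

```python
CLOCK_HZ = 500_000       # clock rate from the text
CLOCKS_PER_STATE = 2     # two clocks per state
STATES_PER_CYCLE = 5     # five states per cycle with no wait states


def state_time_us(clock_hz=CLOCK_HZ, clocks_per_state=CLOCKS_PER_STATE):
    """Duration of one processor state in microseconds."""
    return clocks_per_state * 1_000_000 / clock_hz


def execution_time_us(cycles, states_per_cycle=STATES_PER_CYCLE):
    """Execution time for an instruction that takes `cycles` bus cycles."""
    return cycles * states_per_cycle * state_time_us()


print(state_time_us())        # one state
print(execution_time_us(1))   # instruction fetch only
print(execution_time_us(2))   # fetch plus one data cycle (assumed)
```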

My computer did not have any non-volatile memory, so any program had to be entered manually before releasing the processor. I had 8 switches to set the SRAM address and 8 more to set the data. A data load or write switch entered the data. Since I did not have a compiler or assembler, I manually converted my assembly level code to machine instructions.

By December 1974 I had a computer but no application. Other students had been developing a weather station that would monitor weather and flooding on the White River. I don’t remember which governmental agency wanted the data. The White River was the only waterway in Arkansas that wasn’t dammed and controlled by the Army Corps of Engineers. When everything was ready to deploy, someone realized that the magnetic tape cassette would be filled with data in a little over a week. Since it would be a day-long trip to drive 150 miles to the selected site on the river, launch a small boat to get to the site, exchange the tape, boat back to the car, and drive back to Little Rock, it was determined that recording data 4 times faster than the current design would make it possible to service the site only once per month. The designer of the recording hardware said that the 4x speed up was not a problem. The designer of the playback unit, which also punched paper tape via a teletype machine, said that it could not read the data at 4x speed. After hearing about the issue, I decided to see if my computer could read the mag tape and punch the paper tape. Paper tape was the medium that the customer wanted.

Of course, these tasks are I/O. After looking at the digital waveform from some of the tape read hardware, I realized that the computer could count the periods of the high and low signal and decode the data. The computer could also start and stop the tape drive as needed. The computer had enough memory to store a record of one set of readings. Punching the paper tape was a more straightforward task. The binary data needed to be converted to hexadecimal ASCII characters and transmitted serially at 110 baud to the teletype machine. However, there was no UART available to me, so the computer program sent the characters by bit banging. A software timing loop was used to set the bit rate. Start and stop bits were prepended and appended to the ASCII characters to complete the formatting. An I/O instruction sent a bit to an RS232 driver. A second printed circuit board held the I/O hardware and was connected to the computer board by many discrete wires. The program was only about 69 instructions long. The program read a record from the mag tape, paused the tape, converted the data to ASCII, sent the data to the teletype, and then restarted the tape to read the next record. There was no error handling or end of tape recognition. The setup of the teletype machine was completely manual. Most importantly, it worked and showed everyone the flexibility and utility of small computers. The department was just getting its first minicomputer. This was a very big deal. Computers were just coming online outside of the university data center.
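The hex conversion and bit banging described above can be sketched in Python. This is an illustration, not the original 8008 program: the function names are invented, and the framing (one start bit, eight data bits sent least significant bit first, two stop bits) is an assumption based on common 110 baud teletype practice rather than something stated in the story. The `write_bit` and `delay` callbacks stand in for the I/O instruction and the software timing loop.

```python
BAUD = 110
BIT_TIME_S = 1 / BAUD   # about 9.09 ms per bit


def to_hex_ascii(data):
    """Convert raw bytes to uppercase hexadecimal ASCII characters."""
    return data.hex().upper().encode("ascii")


def frame_bits(char_code, data_bits=8, stop_bits=2):
    """Return the line levels for one character (assumed framing)."""
    bits = [0]                                                 # start bit
    bits += [(char_code >> i) & 1 for i in range(data_bits)]   # LSB first
    bits += [1] * stop_bits                                    # stop bits
    return bits


def bit_bang(data, write_bit, delay):
    """Send a record as hex ASCII, one bit at a time."""
    for char_code in to_hex_ascii(data):
        for bit in frame_bits(char_code):
            write_bit(bit)        # stands in for the output instruction
            delay(BIT_TIME_S)     # stands in for the timing loop


sent = []
bit_bang(bytes([0x3A]), sent.append, lambda seconds: None)
print(to_hex_ascii(bytes([0x3A])), len(sent))
```

Under these assumptions each character costs 11 bit times, so the teletype receives ten characters per second, and every byte of tape data becomes two characters on the paper tape.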

Since my major professor was in charge of the project and we were great friends, we usually serviced the weather station. It was fun boating on the river except for the mosquitoes. When the river was flooding, we could boat all the way to the tree where the hardware was strung up. A long plastic pipe served as a float for measuring the depth of the flood water. Flood data was measured in feet rather than inches. I don’t know the details of the measurement electronics because I didn’t do any of the design. One time we brought the mag tape to the lab and found that no data had been recorded. We made another day trip to the river and brought the measurement electronics back to the lab. The electronics were encased in a .30 caliber steel ammo box. These boxes are waterproof. The failed component was replaced, and the hardware tested okay. It was returned to the river. We decided to check it in a week rather than a month.

My professor asked if the computer was portable. I decided that a large 12V car battery could replace the power supply. Hence, we could take the computer to the river. The circuit boards with their interconnecting wires and I/O cables were laid in the back of his hatchback along with the battery. We drove to the river and fetched the magnetic tape. As we were pulling the boat up the launch ramp, a game warden showed up. He wanted to see our fishing licenses and our catch. We explained that we weren’t fishing. He was incredulous and looked more than a little spooked when we showed him the computer in the car. After he left, I hooked up the battery to the computer and it lit up okay. Whew!

Who knew how it would survive the ride to the river? I could tell by how the LEDs were sequencing and by the tape drive being started and stopped that the computer was reading valid data. We returned to the lab having field tested one of the first portable computers. It wasn’t pretty and it wasn’t rugged, but that computer and those experiences remain fond memories.

I have lived my professional life by taking on challenges that I have no experience solving. No one in the Department of Electronics and Instrumentation, including me, had ever built a computer. I didn’t worry about failure. I don’t think that I have ever failed to deliver the product, but I have made many mistakes as I was learning something new. I hope whoever reads this anecdote feels the fun and excitement of doing something new. Mistakes are small failures on the path to learning and succeeding. May you enjoy your life and your work product as much as I have.